Volumes explode due to extreme growth rates

It is no secret that today's widely available, relatively low-cost broadband connectivity invites people to stream videos, create and share pictures and presentations, and work online. We are also absorbing news, videos, movies, facts, and figures from globally available streaming services. Scientists are collecting data from research, sensors, cameras, microphones, GPS devices, vehicles, and even satellites and rockets in space.

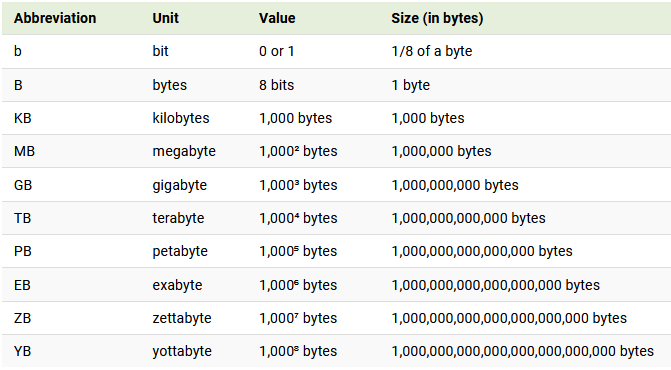

While almost everyone is familiar with bits, bytes, kilobytes, megabytes, gigabytes, terabytes, and probably even petabytes, what comes next are exabytes (1000 petabytes), zettabytes (1000 exabytes), and yottabytes (1000 zettabytes).
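
To get a feel for these magnitudes, here is a minimal Python sketch of the unit ladder. It assumes decimal (SI) units, where each step is a factor of 1000; the names `UNITS` and `convert` are illustrative only and do not come from any library.

```python
# Decimal (SI) storage units; each unit is 1000 times the previous one.
UNITS = ["B", "kB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]


def convert(value: int, src: str, dst: str) -> float:
    """Convert a value from unit `src` to unit `dst` (hypothetical helper)."""
    in_bytes = value * 1000 ** UNITS.index(src)
    return in_bytes / 1000 ** UNITS.index(dst)


# One zettabyte expressed in petabytes, and one yottabyte in zettabytes.
print(convert(1, "ZB", "PB"))  # 1000000.0
print(convert(1, "YB", "ZB"))  # 1000.0
```

In other words, a single zettabyte already equals a million petabytes.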

Growth rate elements

Apart from the multiplication of existing sources, there are also archives and back-up versions in one or more locations. These are all additional copies of the original data at the source. Remember that they come on top of the multiplication of sources during daily use explained earlier. These are all in-house reasons for exponential growth figures.

If your organisation is part of a supply chain or ecosystem, the multiplication, archiving, and back-up procedures are probably repeated there whenever partners use your data or exports of it. The way we share data today is no longer sustainable, simply because of the exponential growth that comes with exporting and importing data across various systems and locations. We need technology that enables visiting data at the source, such as Linked Data and FAIR Data.
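
The multiplication effect described above can be sketched with simple arithmetic. The function `stored_volume` and all numbers below are hypothetical, and the sketch assumes every partner repeats the same copy, archive, and back-up procedures on a full export of the data.

```python
def stored_volume(source_tb: float, working_copies: int, archive_copies: int,
                  backup_copies: int, partners: int = 0) -> float:
    """Estimate total stored volume in TB (illustrative model, not a measurement).

    Assumption: each partner that imports the data repeats the same
    copy, archive, and back-up procedures as the source organisation.
    """
    copies_per_organisation = 1 + working_copies + archive_copies + backup_copies
    organisations = 1 + partners
    return source_tb * copies_per_organisation * organisations


# Hypothetical example: 10 TB at the source, 2 working copies,
# 1 archive, and 2 back-ups, first in-house and then with 4 partners.
print(stored_volume(10, 2, 1, 2))              # 60
print(stored_volume(10, 2, 1, 2, partners=4))  # 300
```

Under these assumptions, 10 TB of original data occupies 60 TB in-house and 300 TB across the ecosystem, which is the overhead that visiting data at the source aims to avoid.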

Facts in publications

The following publications report on data volumes and growth rates:

- Visual Capitalist, “How Much Data is Generated Each Day?”

- Forbes, “How Much Data Do We Create Every Day?”

- PC Reviews, “90% of the Big Data We Generate is an Unstructured Mess.”

- IDC, “80 Percent of Your Data Will be Unstructured in Five Years”

Figures extracted from facts

Some figures, extracted from the presented sources:

- Worldwide data is expected to hit 175 zettabytes by 2025, representing a 61% CAGR,

- 51% of the data will be in data centers and 49% will be in the public cloud,

- 90 ZB of this data will be from IoT devices in 2025,

- 80% of data will be unstructured by 2025,

- There will be 4.8 billion internet users by 2022, up from 3.4 billion in 2017,

- 200 billion IoT devices are projected to be generating data by 2020,

- 90% of all data in existence today was created in the past two years.
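
To make a compound annual growth rate (CAGR) such as the 61% quoted above more tangible, here is a small sketch of the underlying arithmetic. The helper names `project` and `doubling_time` are illustrative, and the sketch assumes the rate stays constant from year to year, which real data growth need not do.

```python
import math


def project(volume: float, cagr: float, years: float) -> float:
    """Volume after `years` of compound growth at rate `cagr` (0.61 means 61%)."""
    return volume * (1 + cagr) ** years


def doubling_time(cagr: float) -> float:
    """Years needed for the volume to double at a constant `cagr`."""
    return math.log(2) / math.log(1 + cagr)


# At a constant 61% CAGR, volume grows by a factor of about 2.59 in two years
# and doubles roughly every 1.46 years.
print(round(project(1.0, 0.61, 2), 4))  # 2.5921
print(round(doubling_time(0.61), 2))    # 1.46
```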

Learning points:

We can conclude that data volumes and growth rates are directly related to the expansion of the following factors:

- Number of users with internet access,

- Number of devices with online capabilities,

- Data types with large volumes, such as video, audio, and movies,

- Interoperability in ecosystems and supply chains,

- Inefficiency in collecting, storing, archiving, back-up, and sharing,

- Inefficiency in finding, accessing, re-using, processing, and presenting,

- Regulations for privacy protection, data protection, accountability, and transparency,

- Streaming data providers, for news, audio, video, movies,

- Available broadband streaming capacity, fixed and mobile,

- Low and falling cost of storage and processing capacity.